ℹ️ Short Bio

Hi, I’m Yuanzhe, a second-year master’s student at the University of California, San Diego 🔱.

I have the great honor of collaborating with Prof. Yaoqing Yang from CS@Dartmouth College, Julian McAuley from CSE@UC San Diego, and Zhiting Hu from HDSI@UC San Diego.

My current research is focused on

-

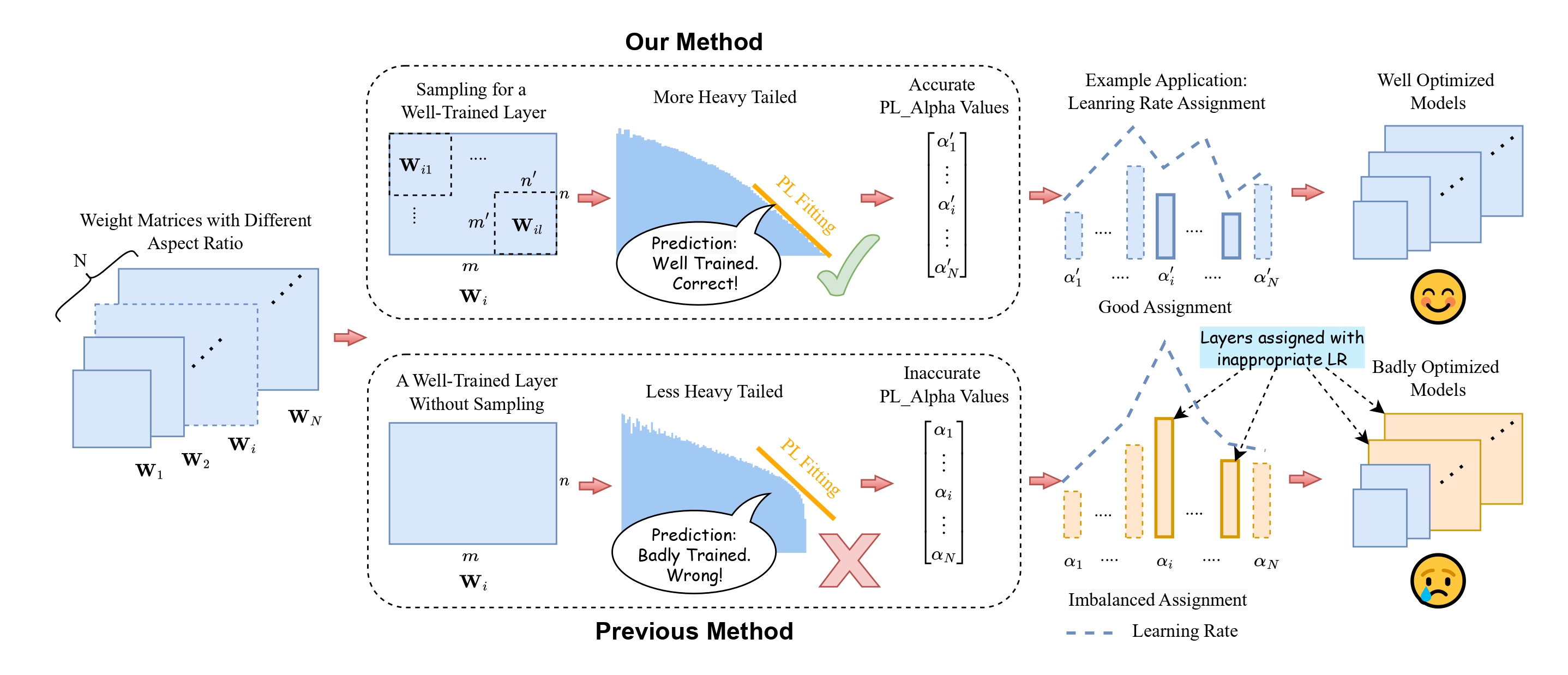

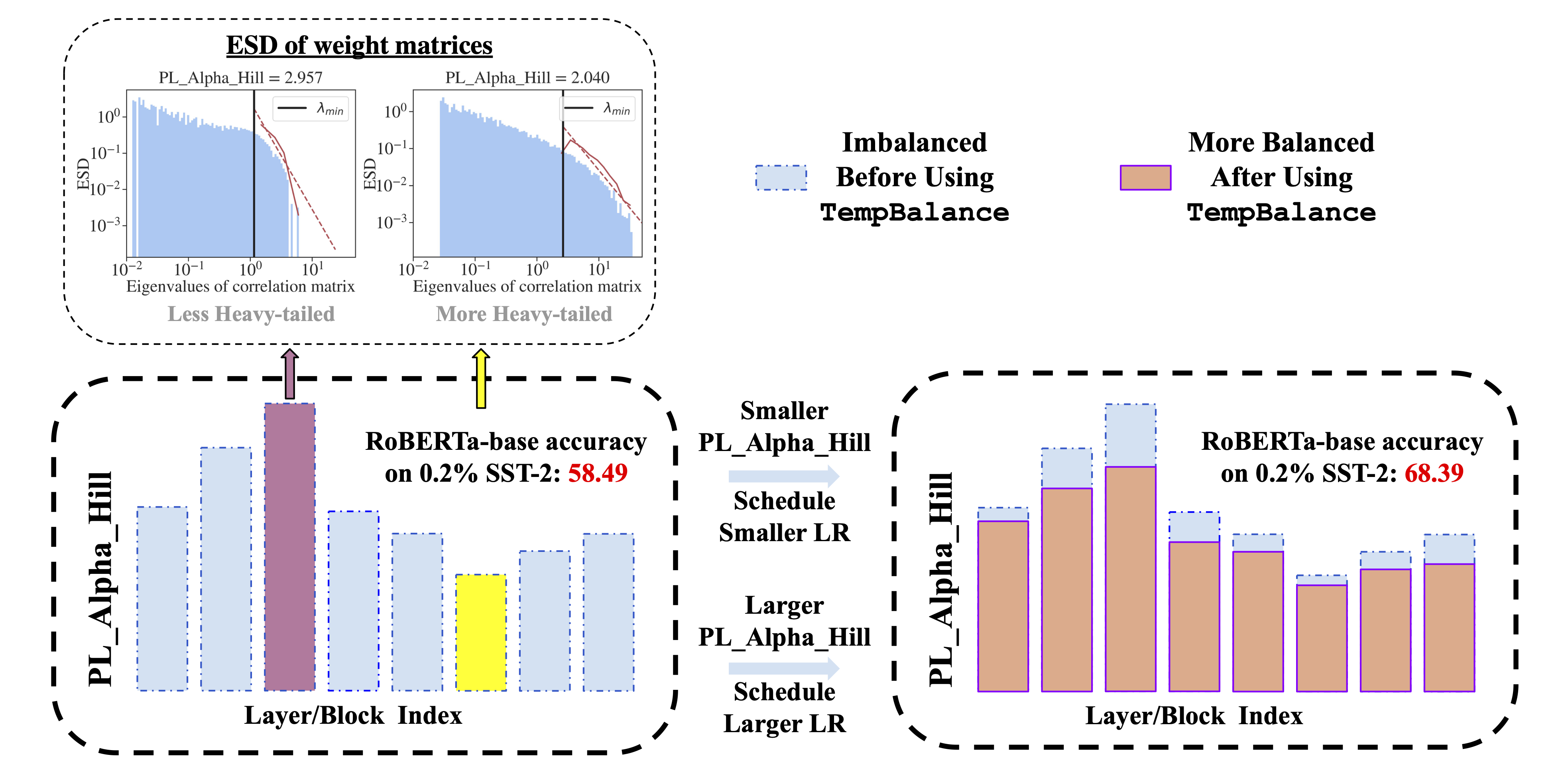

Understanding the mechanisms, training dynamics, and generalization of LLMs and SciML models via mathematical analysis. Building upon this theoretical foundation, We design advanced optimization algorithms to make LLM compression and training more efficient. Previous works include FARMS (ICML 2025) for layer-wise pruning, Model Balancing (EMNLP 2024 Oral) for low-resource fine-tuning.

-

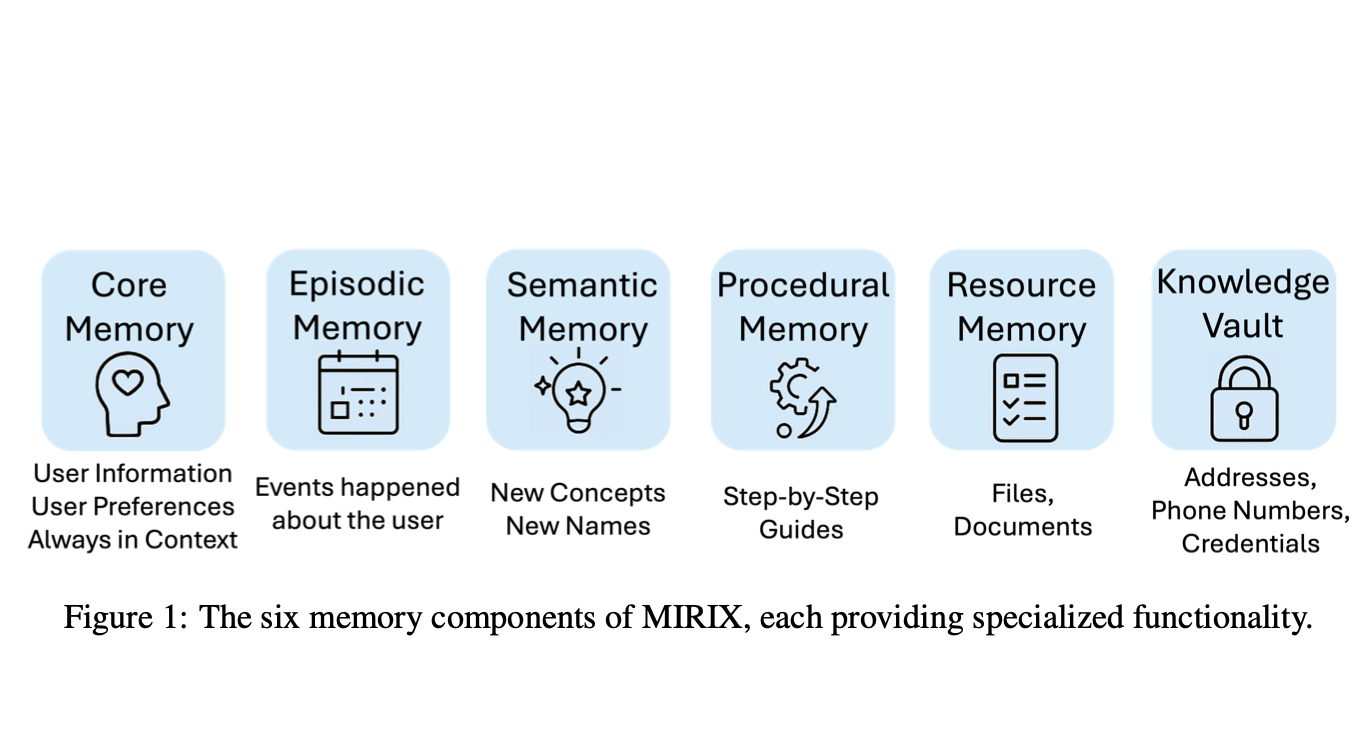

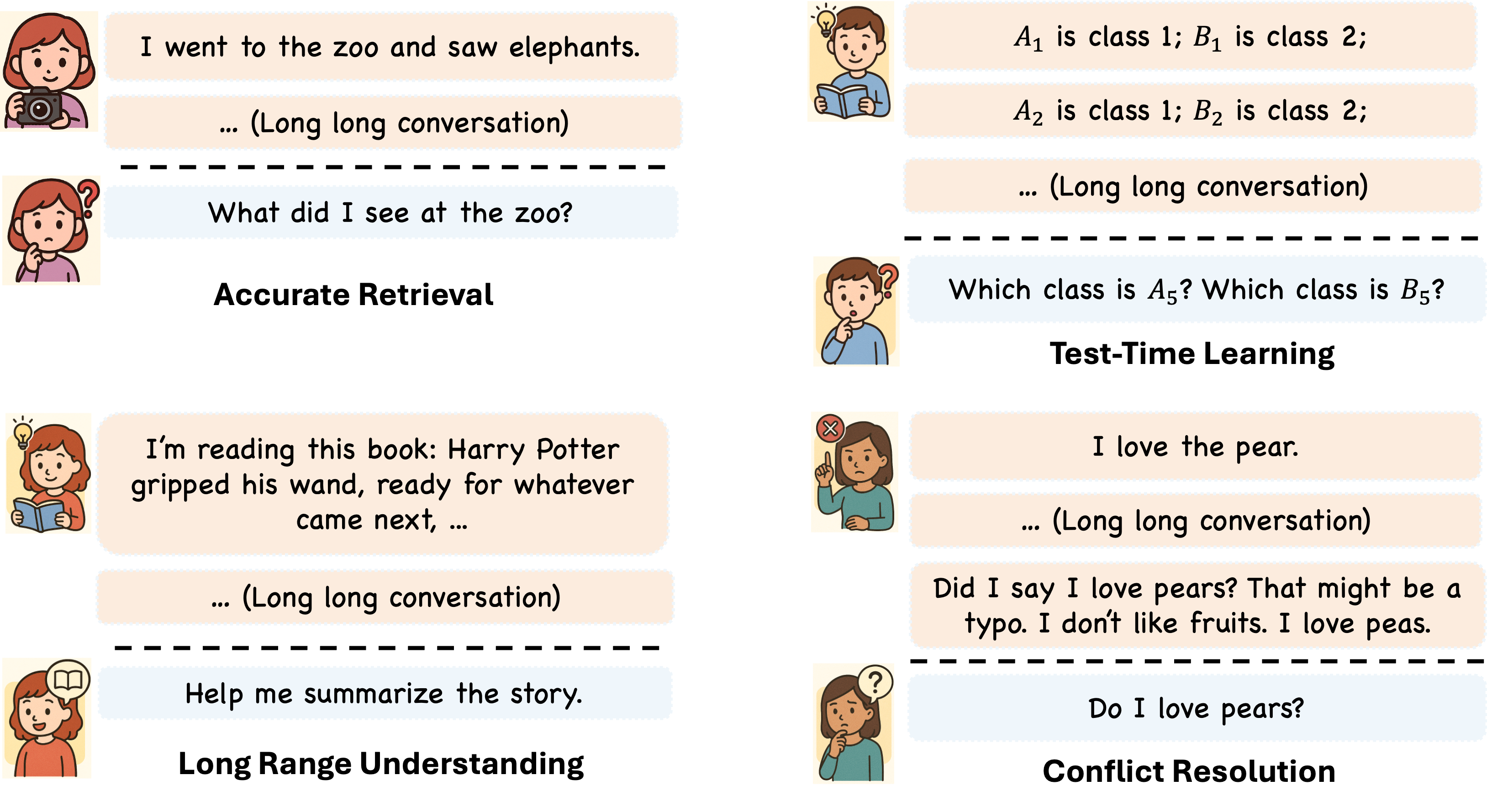

Enhancing Memory and Reasoning in LLMs and Agents, specifically by enabling models to process long-term history and achieve advanced reasoning capabilities through post-training. Previous works include MemoryAgentBench (ICLR 2026), 190+ 🌟 for memory agent comprehensive evaluation, M+ (ICML 2025) for long-term information retention, K2-Think (Tech Report) for large-scale reasoning, MIRIX (Open-source framework, 3K+ 🌟) for multi-agent memory systems, and Mem-alpha (Under Review) for RL-based memory management.

My research leverages mathematical insights into large language models (LLMs) to develop efficient algorithms, while simultaneously unlocking advanced memory and reasoning capabilities in LLMs and AI agents. I am actively open to research collaboration inquiries. Feel free to reach out!

🔥 News

- 2026.01: 🎉🎉 Our paper “Evaluating Memory in LLM Agents via Incremental Multi-Turn Interactions” was accepted by ICLR 2026.

- 2025.09: 😁 Excited to share our recent work “K2-Think: A Parameter-Efficient Reasoning System”.

- 2025.07: 😁 We open-sourced MemoryAgentBench. Thanks to Yu Wang for the great help!

- 2025.05: 🎉🎉 Two papers were accepted by ICML 2025 as posters! See you in Vancouver.

- 2024.09: 🎉🎉 Excited to share that our work “Model Balancing Helps Low-data Training and Fine-tuning” was accepted by EMNLP 2024 as an oral presentation!

- 2024.06: 😁 I graduated from HUST!

- 2024.06: 😄 I created my account on OpenReview!

📖 Education

| Institution and degree | Period |
| --- | --- |
| University of California, San Diego (UCSD), M.S. in Computer Science and Engineering | 2024.09 - 2026.03 (Expected) |
| Huazhong University of Science and Technology (HUST), B.S. in Artificial Intelligence, Innovation Experimental Honor Class, Qiming School, GPA: 3.91/4.0 | 2020.09 - 2024.06 |

⚙️ Research Projects

📖 Mathematical Analysis and Optimization on LLMs and SciML Models

\# denotes equal contribution

🤔 Enhancing Memory and Reasoning in LLMs and Agents

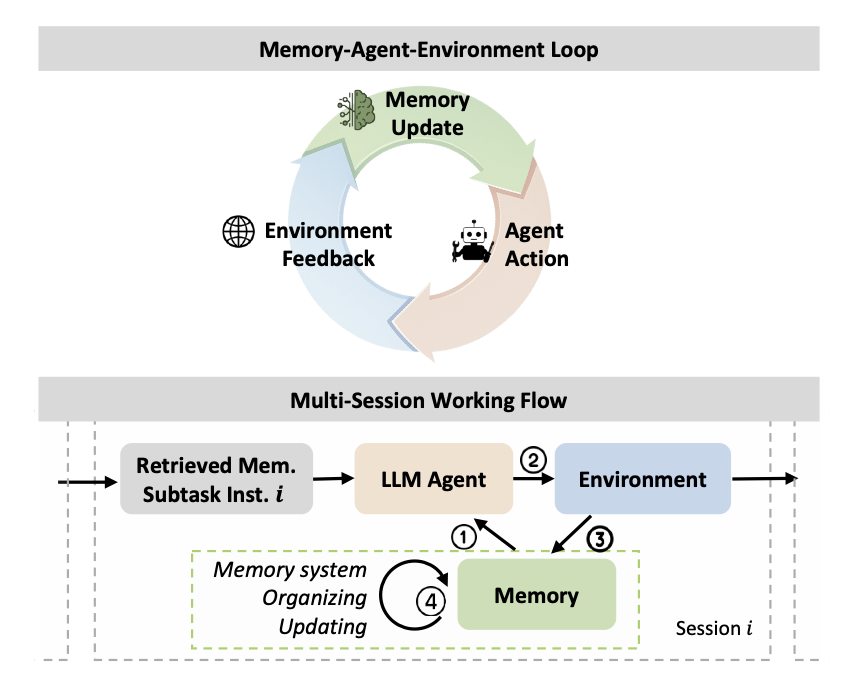

Evaluating Memory in LLM Agents via Incremental Multi-Turn Interactions

{Yuanzhe Hu#, Yu Wang#}, Julian McAuley

ICLR 2026

Short Summary: MemoryAgentBench is a new benchmark designed to comprehensively evaluate memory in LLM agents.

MemoryArena: Benchmarking Agent Memory in Interdependent Multi-Session Agentic Tasks

{Zexue He#, Yu Wang#, Churan Zhi#, Yuanzhe Hu#, Tzu-Ping Chen#, Lang Yin#}, Ze Chen, Tong Arthur Wu, Siru Ouyang, Zihan Wang, Jiaxin Pei, Julian McAuley, Yejin Choi, Alex Pentland

Under Review

Short Summary: We present MemoryArena, a new evaluation gym designed to bridge the gap between isolated recall and execution by benchmarking agents on tasks where memory acquisition and action are tightly coupled.

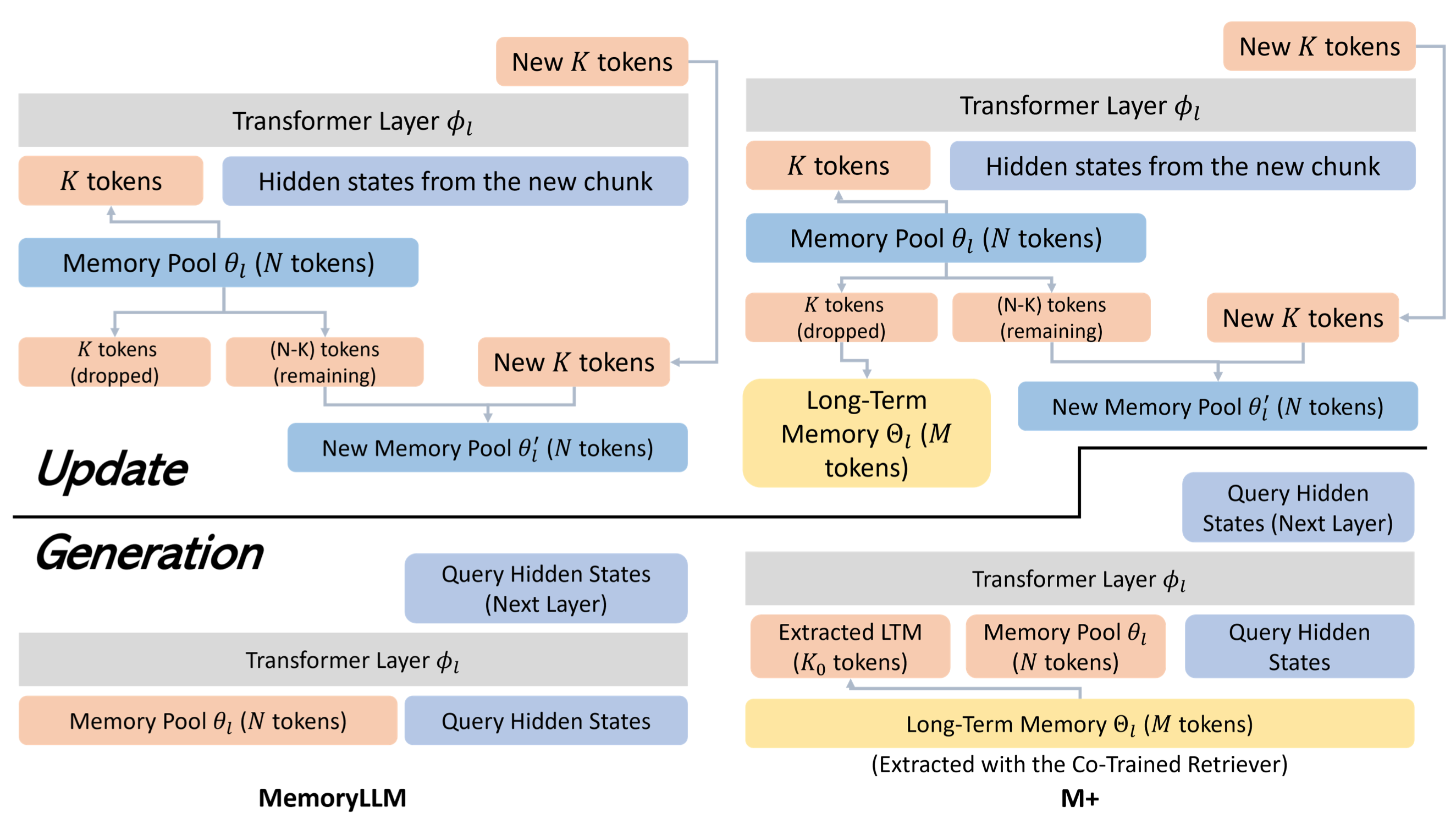

M+: Extending MemoryLLM with Scalable Long-Term Memory

Yu Wang, Dmitry Krotov, Yuanzhe Hu, Yifan Gao, Wangchunshu Zhou, Julian McAuley, Dan Gutfreund, Rogerio Feris, Zexue He

ICML 2025

Short Summary: M+ enhances long-term information retention in LLMs by integrating a retriever-based long-term memory mechanism.

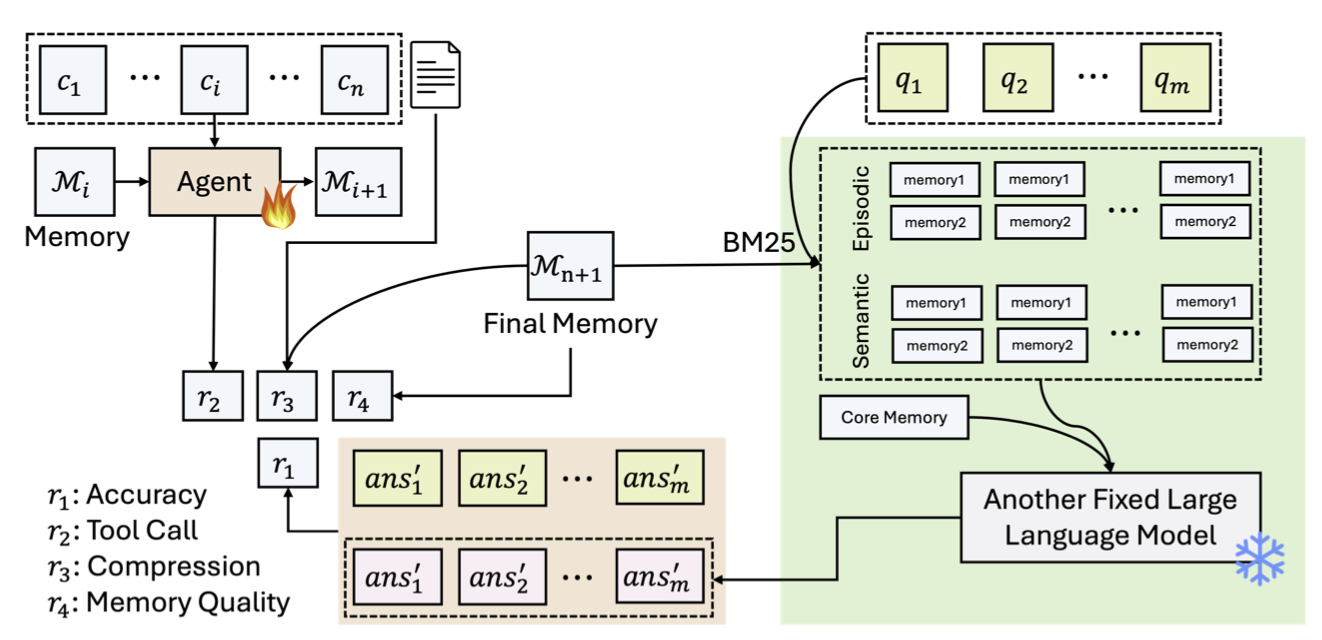

Mem-$\alpha$: Learning Memory Construction via Reinforcement Learning

Yu Wang, Ryuichi Takanobu, Zhiqi Liang, Yuzhen Mao, Yuanzhe Hu, Julian McAuley, Xiaojian Wu

Under Review

Short Summary: Mem-alpha, a reinforcement learning framework, enhances memory management in LLMs through interaction and feedback.

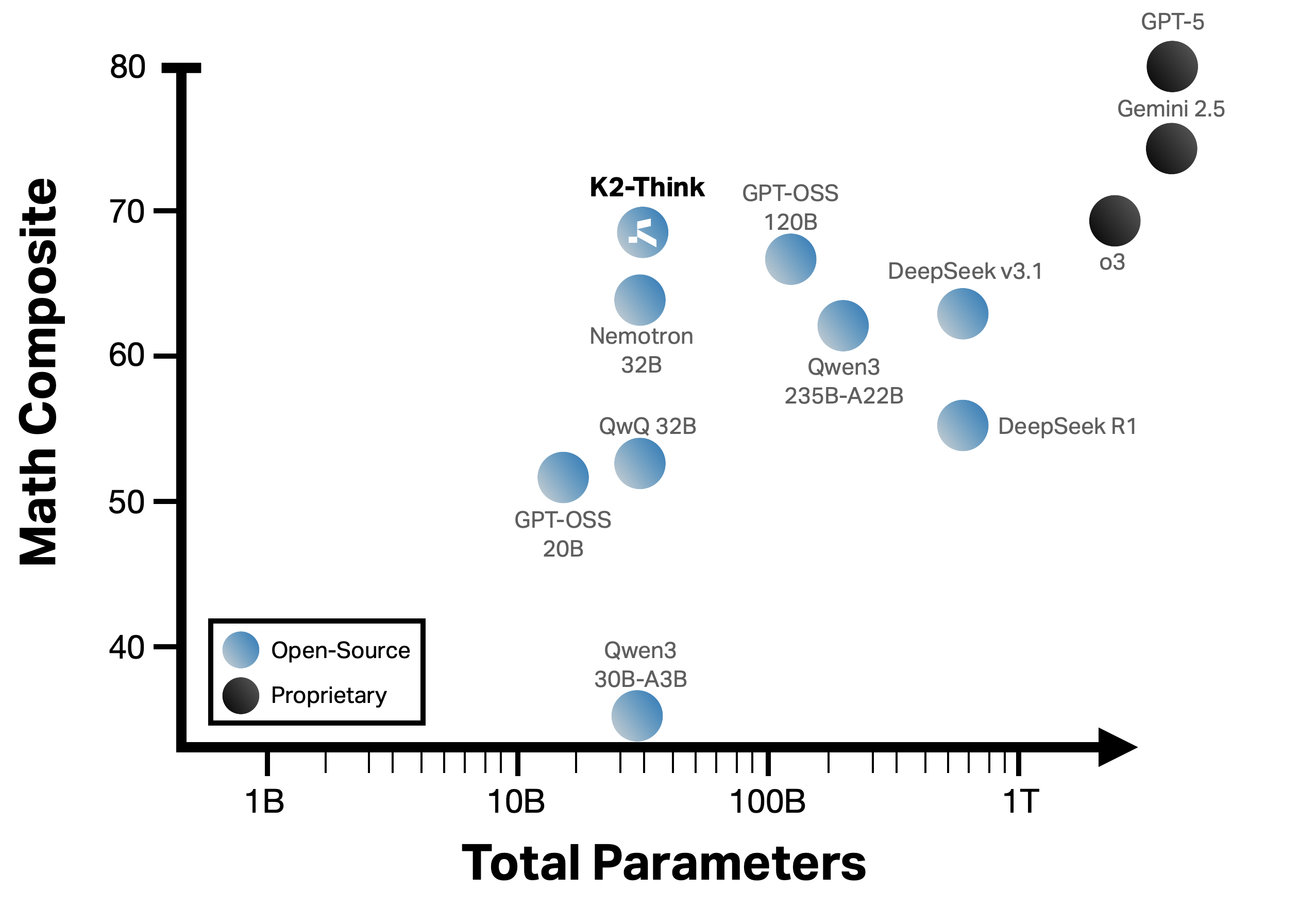

K2-Think: A Parameter-Efficient Reasoning System

Zhoujun Cheng, Richard Fan, Shibo Hao, Taylor W. Killian, Haonan Li, Suqi Sun, Hector Ren, Alexander Moreno, Daqian Zhang, Tianjun Zhong, Yuxin Xiong, Yuanzhe Hu, Yutao Xie, Xudong Han, Yuqi Wang, Varad Pimpalkhute, Yonghao Zhuang, Aaryamonvikram Singh, Xuezhi Liang, Anze Xie, Jianshu She, Desai Fan, Chengqian Gao, Liqun Ma, Mikhail Yurochkin, John Maggs, Xuezhe Ma, Guowei He, Zhiting Hu, Zhengzhong Liu, Eric P. Xing

MBZUAI IFM / LLM 360 Tech Report

Short Summary: K2-Think is a parameter-efficient reasoning system based on a 32B model.